Tech

OpenAI taps iPhone designer Jony Ive to develop AI devices

On Wednesday, OpenAI announced that it had acquired the startup of iPhone designer Jony Ive, a big win for the company.

Ive's startup is called io, and the purchase price is nearly $6.5 billion, according to Bloomberg, which would make it OpenAI's biggest acquisition to date. The official announcement didn't contain much detail and mostly consisted of OpenAI CEO Sam Altman and Ive gushing about each other.

"Two years ago, Jony Ive and the creative collective LoveFrom, quietly began collaborating with Sam Altman and the team at OpenAI. A collaboration built upon friendship, curiosity and shared values quickly grew in ambition. Tentative ideas and explorations evolved into tangible designs. The ideas seemed important and useful. They were optimistic and hopeful. They were inspiring. They made everyone smile. They reminded us of a time when we celebrated human achievement, grateful for new tools that helped us learn, explore and create…We gathered together the best hardware and software engineers, the best technologists, physicists, scientists, researchers and experts in product development and manufacturing. Many of us have worked closely for decades. The io team, focused on developing products that inspire, empower and enable, will now merge with OpenAI to work more intimately with the research, engineering and product teams in San Francisco."

Fortunately, an accompanying video posted on OpenAI's X page has more concrete information.


There's plenty of gushing there too, but the gist is OpenAI is going to make AI-powered devices with Ive and his io team. The initiative is "formed with the mission of figuring out how to make a family of devices that would let people use AI to create all sorts of wonderful things," said Altman in the video.

Altman also shared that he has a prototype of what Ive and his team have developed, calling it the "coolest piece of technology the world has ever seen."

As far back as 2023, there were reports of OpenAI teaming up with Ive for some kind of AI-first device. Altman and Ive's bromance formed over ideas about developing an AI device beyond the current hardware limitations of phones and computers. "The products that we're using to deliver and connect us to unimaginable technology, they're decades old," said Ive in the video, "and so it's just common sense to at least think surely there's something beyond these legacy products."

Ive is famous for his work at Apple, where he led the designs for the iPod, iPhone, iPad, and Apple Watch. Steve Jobs even described Ive as his "spiritual partner."

OpenAI's move into hardware, with a legendary designer no less, shows the company has no intention of slowing down when it comes to dreaming up new products. Just yesterday, Google launched a fleet of AI products, including XR hardware, indicating to some that it had caught up with OpenAI. But OpenAI just unlocked another new realm in AI competition. OpenAI says it plans to share its work with io and Ive starting in 2026.

Disclosure: Ziff Davis, Mashable’s parent company, in April filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.

Tech

Hackers found a way around Microsoft Defender to install ransomware on PCs, report says

Windows users should think about reinforcing their antivirus software. Microsoft Defender should provide a line of defense against ransomware, but a new report claims that hackers have found a way to get around the antivirus tool and infect PCs with ransomware.

A GuidePoint Security report (via BleepingComputer) found that hackers deploying Akira ransomware are exploiting a legitimate PC driver to load a second, malicious driver that shuts off Microsoft Defender, allowing for all sorts of monkey business.

The good driver that's being exploited here is called "rwdrv.sys," which is used by tuning software for Intel CPUs. Hackers abuse it to install "hlpdrv.sys," another driver that they then use to get around Defender and start doing whatever it is they want to do.

GuidePoint reported seeing this type of attack starting in the middle of July. It doesn't seem like the loophole has been patched yet, but the more people know about it, the less likely it is for the exploit to work against them, at least in theory.

In the meantime, allow our colleagues at PCMag to recommend some fine third-party antivirus software to you for your Windows PC. For more information on the latest Akira ransomware attacks — including possible defenses — head to GuidePoint Security.

Tech

ChatGPT fans are shredding GPT-5 on Reddit as Sam Altman responds in AMA (updated)

GPT-5 is out, the early reviews are in, and they're not great.

Many ChatGPT fans have taken to Reddit and other social media platforms to express their frustration and disappointment with OpenAI's newest foundation model, released on Thursday.

A quick glance at the ChatGPT subreddit (which is not affiliated with OpenAI) shows scathing reviews of GPT-5. Since the model began rolling out, the subreddit has filled with posts calling GPT-5 a "disaster," "horrible," and the "biggest piece of garbage even as a paid user."

Awkwardly, Altman and other members of the OpenAI team had a preplanned Reddit AMA to answer questions about GPT-5. In the hours ahead of the AMA, questions piled up in anticipation, with many users demanding that OpenAI bring back GPT-4o as an alternative to GPT-5.

What Redditors are saying about GPT-5

Many of the negative first impressions say GPT-5 lacks the "personality" of GPT-4o, citing colder, shorter replies. "GPT-4o had this… warmth. It was witty, creative, and surprisingly personal, like talking to someone who got you. It didn’t just spit out answers; it felt like it listened," said one redditor. "Now? Everything’s so… sterile."

Another said, "GPT-5 lacks the essence and soul that separated Chatgpt (sic) from other AI bots. I sincerely wish they bring back 4o as a legacy model or something like that."

Several redditors also criticized the fact that OpenAI did away with the option to choose different models, prompting some users to say they're canceling their subscriptions. "I woke up this morning to find that OpenAI deleted 8 models overnight. No warning. No choice. No 'legacy option,'" posted one redditor who said they deleted their ChatGPT Plus account. Another user posted that they canceled their account for the same reason.

As Mashable reported yesterday, GPT-5 integrates various OpenAI models into one platform, and ChatGPT will now choose the appropriate model based on the user's prompt. Clearly, some users miss the old system and models.

Ironically, OpenAI had previously drawn criticism for having too many model options; GPT-5 was supposed to resolve this confusion by consolidating the previous models under GPT-5.

Sam Altman responds to the criticisms

When Altman and the team logged onto the AMA, they faced a barrage of demands to bring back GPT-4o.

"Ok, we hear you all on 4o," said Altman during the AMA. "Thanks for the time to give us the feedback (and the passion!). We are going to bring it back for Plus users, and will watch usage to determine how long to support it."

Altman also addressed feedback that GPT-5 seemed dumber than it should have been, explaining that the "autoswitcher" that determines which version of GPT-5 to use wasn't working. "GPT-5 will seem smarter starting today," he said. Altman also added that the chatbot will make it clearer which model is answering a user's prompt. OpenAI will double rate limits for ChatGPT Plus users once the rollout is finished.

“As we mentioned, we expected some bumpiness as we roll out so many things at once. But it was a little more bumpy than we hoped for!” Altman said in the AMA.

GPT-5 is an improvement, but not an exponential one

Expectations for GPT-5 could not have been higher — and that may be the real problem with GPT-5.

Gary Marcus, a cognitive scientist and author known for his research on neuroscience and artificial intelligence — and a well-known skeptic of the AI hype machine — wrote on his Substack that GPT-5 makes “Good progress on many fronts” but disappoints in others. Marcus noted that even after multi-billion-dollar investments, “GPT-5 is not the huge leap forward people long expected.”

The last time OpenAI released a frontier model was over two years ago with GPT-4. Since then, several competitors like Google Gemini, Anthropic's Claude, xAI's Grok, Meta's Llama, and DeepSeek R1 have caught up to OpenAI on benchmarks, agentic features, and user loyalty. For many, GPT-5 had the power to reinforce or topple OpenAI's reign as the AI leader.

With this in mind, it was inevitable that some users would be disappointed, though many ChatGPT users have shared positive reviews of GPT-5 as well. Time may blunt these criticisms as OpenAI makes improvements and tweaks to GPT-5. The company has also historically been responsive to user feedback, with Altman being very active on X.

"We currently believe the best way to successfully navigate AI deployment challenges is with a tight feedback loop of rapid learning and careful iteration," the company's mission statement avows.

Disclosure: Ziff Davis, Mashable’s parent company, in April filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.

UPDATE: Aug. 8, 2025, 3:20 p.m. EDT This story has been updated with Sam Altman's responses from the Reddit AMA.

Tech

YouTube will begin using AI for age verification next week

YouTube is officially rolling out its AI-assisted age verification next week to catch users who lie about their age.

YouTube announced in late July that it would start using artificial intelligence for age verification. And this week, 9to5Google reported that the new system will go into effect on Aug. 13.

The new system will "help provide the best and most age-appropriate experiences and protections," according to YouTube.

"Over the next few weeks, we’ll begin to roll out machine learning to a small set of users in the US to estimate their age, so that teens are treated as teens and adults as adults," wrote James Beser, Director of Product Management with YouTube Youth, in a blog post. "We’ll closely monitor this before we roll it out more widely. This technology will allow us to infer a user’s age and then use that signal, regardless of the birthday in the account, to deliver our age-appropriate product experiences and protections."

"We’ve used this approach in other markets for some time, where it is working well," Beser added.

The AI interprets a "variety of signals" to determine a user's age, including "the types of videos a user is searching for, the categories of videos they have watched, or the longevity of the account." If the system determines that a user is a teen, it will automatically apply age-appropriate experiences and protections. If the system incorrectly estimates that an adult is under 18, the user will have to verify that they're over 18 with a government ID or credit card.

This comes at a time in which age verification efforts are ramping up across the world — and not without controversy. As Wired reported, when the UK began requiring residents to verify their ages before watching porn as part of the Online Safety Act, users immediately started using VPNs to get around the law.

Some platforms use face scanning or IDs, which can be easily faked. As generative AI gets more sophisticated, so will the ability to work around age verification tools. And, as Mashable previously reported, users are reasonably wary of giving too much of their private information to companies because of security breaches, as in the recent Tea app leak.

In theory, as Wired also reported, "age verification serves to keep kids safer." But, in reality, "the systems being put into place are flawed ones, both from a privacy and protection standpoint."

Samir Jain, vice president of policy at the nonprofit Center for Democracy & Technology, told the Associated Press that age verification requirements "raise serious privacy and free expression concerns," including the "potential to upend access to First Amendment-protected speech on the internet for everyone, children and adults alike."

"If states are to go forward with these burdensome laws, age verification tools must be accurate and limit collection, sharing, and retention of personal information, particularly sensitive information like birthdate and biometric data," Jain told the news outlet.

-

Entertainment5 months ago

Entertainment5 months agoNew Kid and Family Movies in 2025: Calendar of Release Dates (Updating)

-

Tech5 months ago

The best sexting apps in 2025

-

Tech6 months ago

Tech6 months agoEvery potential TikTok buyer we know about

-

Tech6 months ago

iOS 18.4 developer beta released — heres what you can expect

-

Politics6 months ago

Politics6 months agoDOGE-ing toward the best Department of Defense ever

-

Tech6 months ago

Tech6 months agoAre You an RSSMasher?

-

Politics6 months ago

Politics6 months agoToxic RINO Susan Collins Is a “NO” on Kash Patel, Trashes Him Ahead of Confirmation Vote

-

Politics6 months ago

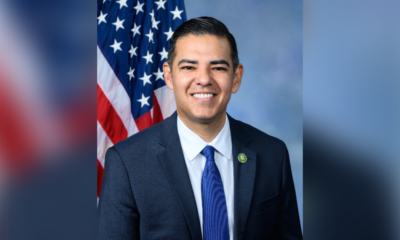

Politics6 months agoAfter Targeting Chuck Schumer, Acting DC US Attorney Ed Martin Expands ‘Operation Whirlwind’ to Investigate Democrat Rep. Robert Garcia for Calling for “Actual Weapons” Against Elon Musk